ENGR 103 - Spring 2013

Freshman Engineering Design Lab

“Hands free computer interface”

Project Design Proposal

Date Submitted: April 12, 2013

Submitted to:

Dr. Fromm, fromme@drexel.edu

D.J. Bucci, djb83@drexel.edu

Group Members:

Cheryl Tang, ct482@drexel.edu

Abstract:

Many people with limited hand use cannot fully use their computers. Existing systems use various trackers to control the mouse, but they do little for ease of use or for commands that are better suited to a keyboard. The goal of this design project is to facilitate computer use for people with limited or no hand mobility, such as quadriplegics, by combining a voice command system that lets users speak various commands with a scripted mouse controlled by the muscles around the eyes and jaw. This will be a difficult system to implement: the user will have to focus on how they move their eyes and jaw, and integrating the different systems could cause problems because the same muscles serve more than one purpose. The final product will include a set of electrodes and a microphone. The electrodes and microphone will send signals to a MATLAB script, which will differentiate between commands and interface with the computer’s operating system to control the mouse and execute functions by voice command.

1 Introduction

Computers are constantly being updated for user-friendliness, but these updates usually fail to take into account one audience: people with limited or no hand mobility. Controlling a mouse and navigating a computer is difficult for them. This audience includes quadriplegics, amputees, arthritis sufferers, and anyone who would prefer to give their hands a break.

We plan to create a hands-free way to interact with the computer. Our device will detect electrical signals produced by the eyes and facial muscles and use them to emulate a computer mouse. EMG signals from the jaw muscles will control left and right clicking, and EOG signals from the eyes will control the movement of the cursor on-screen. To make navigating the computer even easier, we will also implement voice recognition to give users access to programs and actions. These will include shortcuts to the Internet, zooming in and out, saving a document, etc.

Electrodes will have to be placed on the face, due to users’ lack of hand mobility. Studies on facial muscles around the eye and jaw will allow us to determine the best placement for the electrodes. We will also develop and adjust a speech-recognition algorithm in MATLAB. Testing and training will be needed for the program. This will involve repeating a single word into a microphone and capturing that speech. Incoming speech will be compared to this captured speech to determine what the user said.

One challenge is getting the electrodes to pick up signals quickly enough that the cursor stays synchronized with eye and jaw movement, with little to no lag. The voice program will also need to recognize the user’s speech correctly, which is difficult because users will not always say the same thing the same way.

We hope to allow the users to quickly and easily navigate their computer with only their eyes, jaw, and voice.

2 Deliverables

The goal is to produce a functional interface system that uses facial muscles to control mouse movement and voice commands to trigger shortcuts and to open and close programs. Electrode readings of facial muscle movement will be recorded in MATLAB, and the signals produced by looking in a given direction will be associated with moving the cursor in that direction. The left and right mouse buttons will be controlled by clenching the corresponding side of the jaw once the cursor is where the user wants it. Reference voice commands will be recorded in MATLAB initially and then compared to any voice input received after the user opens their mouth, which will be detected by another electrode.

The functions that the code will execute include zooming in and out, saving documents, undoing or redoing operations, opening and closing programs (with an option to add more), and others that will help the user navigate the interface more easily.

The program will be tested by multiple individuals and under different circumstances to determine how well it works at different noise levels and with different people. At a minimum, it should work in the environment for which it was coded; its performance under any other conditions will show how versatile it is. These tests will be recorded as part of the final presentation.

3 Technical Activities

3.1 Electronics

Electrooculograph (EOG) technology is based on the small electrical potential of the eye, which has a net positive charge at the cornea and a negative one at the retina. As the eye moves, electrodes placed on the skin will pick up the resulting change in potential, which will be used to control the computer. The electrodes will be placed on the face to the side of the eye and below the eye, and adjusted until the sensors record data successfully. Because the base potential difference is on the order of microvolts, the signal will need to be amplified so that MATLAB can distinguish between small changes in charge. This will be accomplished with an instrumentation amplifier connected to the electrodes on the face and to a reference electrode placed on the forehead, where there will be no detectable charge from movement of the eyes. After the signal is amplified, it will be fed to a data acquisition board compatible with MATLAB. The electrodes provide the signal input for the mouse code and allow the user to move the cursor and click the buttons much as they would with a physical mouse.

The mouse buttons will be controlled by Electromyograph (EMG) technology. This is based on the same principles as EOG, but it uses the electrical signal produced by the movement of skeletal muscles. For this application, electrodes will be placed on either side of the face over the jaw muscles that close and open the jaw. These electrodes will also be connected to an amplifier, and will control both the clicking of the mouse and the activation of the microphone for voice commands.

Figure 1: Electronics and Signal Acquisition
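The jaw-clench click detection described in this section could be prototyped roughly as follows. This is a minimal sketch written in Python purely for illustration (the project itself targets MATLAB). The function names, the window length, and the threshold factor are hypothetical choices, not values from the proposal; it assumes a resting level has already been measured while the jaw is relaxed.

```python
def moving_average_envelope(samples, window=5):
    """Rectify the signal and smooth it with a trailing moving average."""
    rectified = [abs(s) for s in samples]
    envelope = []
    for i in range(len(rectified)):
        start = max(0, i - window + 1)
        chunk = rectified[start:i + 1]
        envelope.append(sum(chunk) / len(chunk))
    return envelope


def detect_clenches(samples, rest_level, factor=3.0, window=5):
    """Return the sample indices at which a clench begins.

    rest_level is the envelope level measured with the jaw relaxed
    (assumed to come from a calibration run); factor is an assumed
    multiplier that sets the detection threshold above that level.
    """
    threshold = rest_level * factor
    envelope = moving_average_envelope(samples, window)
    onsets = []
    above = False
    for i, value in enumerate(envelope):
        if value > threshold and not above:
            onsets.append(i)  # rising edge: report one click per clench
        above = value > threshold
    return onsets
```

Reporting only the rising edge means a sustained clench produces a single click rather than a burst of clicks, which is one plausible way to keep the buttons usable.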

3.2 Mouse Coding

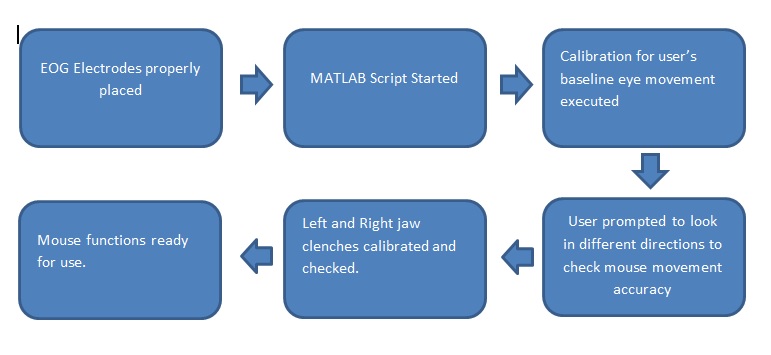

The MATLAB code will take the electrical signals produced by movement around the eyes and map them to movement of the cursor on the computer screen. Each mouse button will be mapped to clenching the respective side of the jaw once the cursor is properly placed. The MATLAB code will first take a baseline reading of the user’s EOG levels and then run a calibration test in which the user is asked to look in different directions while the program records the signal from each electrode for each action. Once the algorithm has been trained on the user’s signals, it will be checked against a new set of eye movements. The signal levels from the electrodes on the jaw muscles, which control the clicking action of the mouse, will need to be coded and tested in the same way.

Figure 2. The above block diagram summarizes the initialization of the mouse script.
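The baseline-then-classify idea in this section can be sketched as follows. The code is Python for illustration only (the project itself targets MATLAB), and everything in it is an assumption: the two-channel (horizontal, vertical) reading, the sign convention, the dead-zone width, and the function names are hypothetical.

```python
def calibrate(baseline_samples):
    """Average (horizontal, vertical) readings taken while looking straight ahead."""
    n = len(baseline_samples)
    h0 = sum(h for h, _ in baseline_samples) / n
    v0 = sum(v for _, v in baseline_samples) / n
    return h0, v0


def gaze_direction(reading, baseline, dead_zone=0.2):
    """Map one (horizontal, vertical) reading to a cursor direction.

    Assumed sign convention: positive horizontal means right and
    positive vertical means up. Readings within dead_zone of the
    baseline are treated as no movement.
    """
    dh = reading[0] - baseline[0]
    dv = reading[1] - baseline[1]
    if abs(dh) < dead_zone and abs(dv) < dead_zone:
        return "none"
    if abs(dh) >= abs(dv):
        return "right" if dh > 0 else "left"
    return "up" if dv > 0 else "down"
```

A dead zone around the baseline is one simple way to keep the cursor still while the user is reading or looking at a fixed point; its width would have to be tuned per user during the calibration run.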

3.3 Voice Command Coding

The microphone will record user responses to different prompts for shortcuts or programs, including:

- “Open/close *program*”, including Internet Explorer, Microsoft Word, Windows Media Player, and iTunes, with an option to add other desired programs

- “Save document”

- “Undo” or “redo”

- “Zoom in/out”

- “Stop voice commands”

- “Search for file”

These will allow the user to gain much of the functionality of the keyboard for shortcuts and improve the interface and ease of use.
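One way to organize the commands listed above is a lookup table that turns a recognized phrase into an action. The sketch below is Python for illustration only (the project itself targets MATLAB). The shortcut strings are common Windows key combinations assumed here for the example, not bindings specified in this proposal, and `resolve_command` is a hypothetical helper that only decides what to do; it does not send anything to the operating system.

```python
# Assumed mapping from recognized phrases to common Windows shortcuts.
SHORTCUTS = {
    "save document": "ctrl+s",
    "undo": "ctrl+z",
    "redo": "ctrl+y",
    "zoom in": "ctrl+plus",
    "zoom out": "ctrl+minus",
}


def resolve_command(phrase, programs):
    """Translate a recognized phrase into an (action, target) pair.

    programs is the user-extensible set of lowercase program names
    that "open" and "close" are allowed to act on.
    """
    text = " ".join(phrase.lower().split())  # normalize case and spacing
    if text in SHORTCUTS:
        return ("shortcut", SHORTCUTS[text])
    if text == "stop voice commands":
        return ("stop_listening", None)
    if text == "search for file":
        return ("search", None)
    for verb in ("open", "close"):
        prefix = verb + " "
        if text.startswith(prefix) and text[len(prefix):] in programs:
            return (verb, text[len(prefix):])
    return ("unrecognized", text)
```

Keeping the program names in a separate set is what makes the "option to add other desired programs" cheap: adding a program is one new entry rather than new code.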

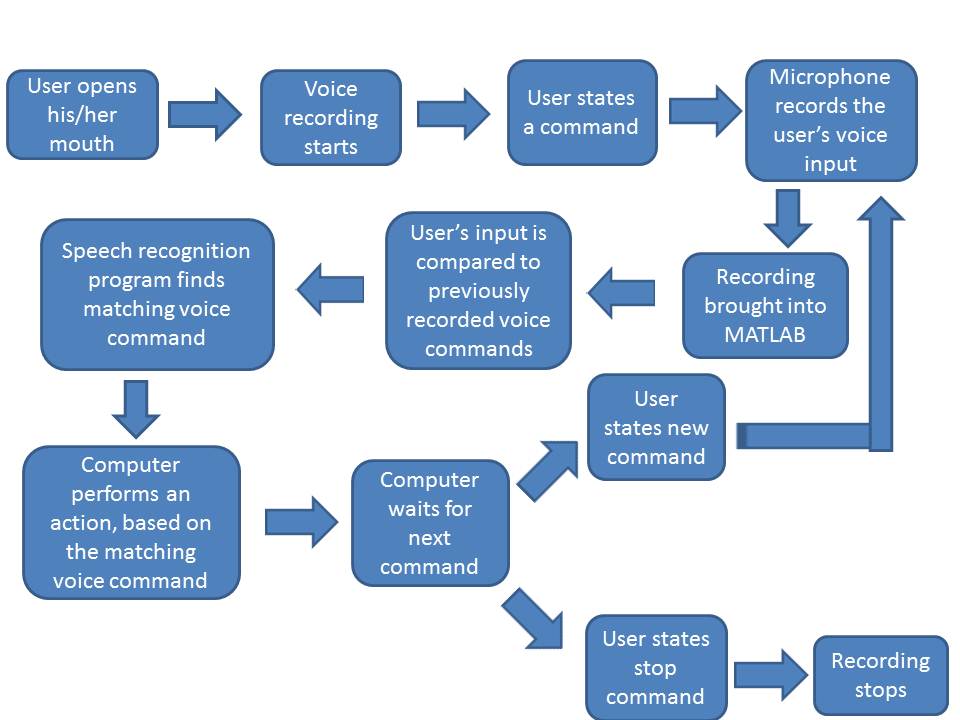

The responses to the prompts will be stored and then compared to the voice commands given by the user. When the speech recognition program recognizes a command, it will activate it through a section of code that interacts with the operating system. MathWorks, the company that created MATLAB, has documented some previous work on speech recognition, which will be referenced, but that work has not been implemented in conjunction with a hands-free mouse control system. This will be one of the more complicated parts to code, and getting the program to recognize the different commands will require a lot of work. To accomplish this, testing must be done to collect data from the microphone readings of each command; from these, a normalized range for each command will be catalogued for reference. This will require a relatively high level of MATLAB coding, so online resources will prove very useful.

Figure 3: Voice Command Recording and Implementation
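Comparing incoming speech against stored recordings can be illustrated with a small template matcher. The proposal does not name a matching method, so the sketch below swaps in dynamic time warping (DTW), a standard technique for comparing sequences spoken at different speeds. It is Python for illustration only (the project itself targets MATLAB); the one-number-per-frame features, the function names, and the rejection threshold are all assumptions.

```python
def dtw_distance(a, b):
    """Dynamic time warping distance between two 1-D feature sequences."""
    inf = float("inf")
    cost = [[inf] * (len(b) + 1) for _ in range(len(a) + 1)]
    cost[0][0] = 0.0
    for i in range(1, len(a) + 1):
        for j in range(1, len(b) + 1):
            step = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of the three allowed alignments.
            cost[i][j] = step + min(cost[i - 1][j],
                                    cost[i][j - 1],
                                    cost[i - 1][j - 1])
    return cost[len(a)][len(b)]


def recognize(features, templates, max_distance):
    """Return the best-matching command name, or None if nothing is close enough."""
    best_name, best_dist = None, float("inf")
    for name, template in templates.items():
        dist = dtw_distance(features, template)
        if dist < best_dist:
            best_name, best_dist = name, dist
    return best_name if best_dist <= max_distance else None
```

Because DTW lets one frame align with several frames of the other sequence, a slowly spoken command can still match its template, which addresses the concern that users will not always say the same thing the same way. The rejection threshold keeps background noise from being forced onto the nearest command.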

4 Project Timeline

| Task | Weeks scheduled (of 10) |
| --- | --- |
| EMG/EOG and Speech study | 2 |
| Mechanical design | 5 |
| Electrical design | 5 |
| Coding | 4 |
| System integration | 3 |
| Testing for cursor/voice | 3 |
| Final report preparation | 3 |

Figure 4: Freshman design project timeline.

5 Facilities and Resources

The group will need time in the engineering labs to program and test the electrode system and the software as they progress. In addition, some materials for the project may be borrowed from the engineering department; these are listed as such in the budget section. As the project consists almost entirely of coding in MATLAB, facility and resource needs will be minimal.

6 Expertise

- As stated, this project consists nearly entirely of coding in MATLAB. As such, strong proficiency in MATLAB is crucial. Much of the project is dependent on how well the program can refine the data inputs and respond to the user’s commands.

- Knowledge of circuitry necessary to connect electrodes to the amplifier and to assemble the circuit board.

- Knowledge of facial muscle structure, for accurate and proper placement of electrodes.

7 Budget

| Category | Projected Cost |
| --- | --- |
| Electrodes | $27.99 |
| Amplifier | $5.79 - $29.80 |
| Circuit Board | $3.49 |
| Data Acquisition Board | $0.00 |
| TOTAL | $37.27 - $61.28 |

Figure 5: Freshman design project budget.

7.1 EMG/EOG Electrodes

The electrodes are the most important of the required physical materials. The EMG/EOG sensors will detect the electrical signals caused by movement of the eyes and jaw. These signals are first fed into the instrumentation amplifier to be scaled to a readable level, and then passed to MATLAB for processing.

7.2 Instrumentation Amplifier

The instrumentation amplifier is needed to amplify the EMG/EOG signals to a level suitable for data acquisition. It amplifies the difference in voltage between its inputs, so that the signal can be read properly by the data acquisition board. This device will be needed relatively early in the project, preferably prior to week four.
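Amplifying the difference between two inputs matters because interference that appears equally on both electrodes cancels out. The sketch below models an ideal amplifier in Python, for illustration only. The gain, the 50 microvolt signal, and the 10 millivolt interference level are assumed example values, not specifications of the part budgeted here, and a real amplifier rejects common-mode voltage only imperfectly.

```python
def instrumentation_amp(v_plus, v_minus, gain):
    """Ideal model: the output is the gain times the input difference."""
    return gain * (v_plus - v_minus)


# Assumed example values, chosen only to show the effect.
signal = 50e-6       # 50 microvolt signal present on one electrode
interference = 0.01  # 10 millivolt pickup present on both electrodes

output = instrumentation_amp(signal + interference, interference, gain=1000)
print(round(output, 6))  # the shared interference cancels, leaving gain * signal
```

With these numbers the interference is 200 times larger than the signal at the electrodes, yet the output contains only the amplified signal, which is why a differential stage comes before any further processing.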

| Amplifier Subcomponent | Projected Cost |
| --- | --- |
| The instrumentation amplifier | $5.18 |
| Stranded wire | $15.56 (if bought from Digikey) or $0 (if borrowed from Drexel) |
| Alligator clips | $8.45 (if bought from Digikey) or $0 (if borrowed from Drexel) |
| 9V battery leads | $0.61 |
| Total | $5.79 - $29.80 |

Figure 6: Amplifier budget

7.3 Circuit Board

The parts (amplifier, battery leads, and connectors for alligator clips) will be assembled on a printed circuit board, which will be obtained from the ECE department. Two separate circuit boards will be needed, one for EOG signals and the other for EMG signals. The signals will be filtered and amplified on the circuit board. The filtering removes low-frequency components, such as those caused by movement of the electrodes and wires, which prevents the EOG and EMG signals from being corrupted by outside sources. The circuit board also connects to the data acquisition board and sends the signals to it.
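The effect of removing low frequencies can be shown with a software equivalent of a simple RC high-pass filter. The proposal performs this filtering in hardware on the circuit board, so the sketch below is only a digital illustration of the same idea, written in Python. The cutoff frequency and sample rate in the usage lines are assumed values, and `high_pass` is a hypothetical name.

```python
import math


def high_pass(samples, cutoff_hz, sample_rate_hz):
    """First-order discrete high-pass filter (digital analogue of an RC stage)."""
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    dt = 1.0 / sample_rate_hz
    alpha = rc / (rc + dt)
    filtered = []
    prev_in = samples[0] if samples else 0.0  # start at rest on the first sample
    prev_out = 0.0
    for x in samples:
        # Pass changes in the input; let any constant level decay away.
        prev_out = alpha * (prev_out + x - prev_in)
        prev_in = x
        filtered.append(prev_out)
    return filtered


# Assumed values: 1 Hz cutoff, 100 Hz sampling, fast signal riding on an offset of 5.
raw = [5.0 + (-1) ** n for n in range(1000)]
clean = high_pass(raw, cutoff_hz=1.0, sample_rate_hz=100.0)
```

In this example the constant offset, standing in for a slow baseline shift such as one caused by a moving wire, is removed while the fast component passes through nearly unchanged.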
